Conquer the Slow: How to Accelerate Your Apps With a CDN

Originally published on March 14, 2017 by Matt Conran

Last updated on January 23, 2024

18 minute read

This article is a guest post by Matt Conran of Network Insight, and it's the first in a new series about Content Delivery Networks (CDNs). In this post, Matt covers the reasons why application performance on the Internet is often unacceptably slow, and how CDNs vastly improve the user experience. Read here to learn why you must make CDNs a critical part of your website strategy.

Introduction

We are witnessing an era of hyper-connectivity with everything and anything getting pushed to the Internet to take advantage of its global footprint. A lot of content and Internet-based applications are publicly available, yet the Internet is bundled with complexities and shortcomings. The fact that we are building better networks does improve matters but there are still plenty of challenges.

Challenges arise from the way Internet Service Providers interconnect, from how global routes are (mis)managed, and from the underlying protocols that build the fabric. The Internet is full of bufferbloat, asymmetric routing and other performance-related problems. Packets do not have a coordinated flight path and users in different locations experience varying levels of service. Distance is one of the leading factors that affect application performance. The further you are from content, the poorer the performance.

The following sections highlight key motivations for employing Content Delivery Networks (CDNs). There have been many changes to the applications and everyone has high expectations. The issue is that the foundation of the Internet is not performance-oriented, which forces us to employ techniques and tools to enhance users' experiences.

Motivations

New Era of Application

The application stack has changed rapidly. We are no longer tied to environments with a single monolithic application per server. Multi-tiered microservice applications, with load balancing and firewalling between tiers, are dispersed across multiple locations around the globe and serve a distributed user set.

Scattered Users

If all users were in one place and didn't move, we could just have a central location for ALL content and services, but this is clearly not the case. Having all content in a single place with users requesting from a variety of locations does not serve any purpose. It's like having the same stamp for the entire world. Users are distributed everywhere and they rely on global reachability for service and application interaction.

Global Reachability

Global reachability means that if you have someone's IP address, you can access them directly. PING is a common way to test reachability, to determine that the requested host can accept requests. The physical location is irrelevant for PING connectivity, but it has a large impact on connection performance. This is the model of the Internet. It has changed slightly over the years, but the principle of global reachability stays with us.

Inefficient Protocols

Unfortunately, the protocols that form the Internet's fabric were designed without performance in mind. No one is to blame, it's just the way things are.

Border Gateway Protocol (BGP), the protocol that glues the Internet together, does not by default take link performance into consideration. It relies on AS-Path (Autonomous System Path) length as a key metric, ignoring the performance shape of the Internet. All else being equal, path selection comes down to the number of ASs. AS count is not a good indication of the best performing path and may lead to traffic going over links with a low AS count but a high rate of packet loss or latency. Extensions to BGP were added later, but these just add to network complexity and are not rolled out on a global scale.
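To make the AS-count problem concrete, here is a toy sketch in Python. It is not real BGP: the `Route` type, the route names, the AS numbers (taken from the range reserved for documentation) and the latency and loss figures are all invented for illustration. It only shows that picking the shortest AS path can choose a worse-performing route.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Route:
    name: str
    as_path: Tuple[int, ...]  # AS numbers traversed to reach the destination
    latency_ms: float         # hypothetical measurement; BGP never sees this
    loss_pct: float           # hypothetical measurement; BGP never sees this


def pick_by_as_path(routes):
    """Choose the route with the fewest ASs, ignoring performance entirely."""
    return min(routes, key=lambda r: len(r.as_path))


def pick_by_latency(routes):
    """Choose the route a performance-aware selection would prefer."""
    return min(routes, key=lambda r: r.latency_ms)


routes = [
    Route("short-but-lossy", (64500, 64510), latency_ms=180.0, loss_pct=2.5),
    Route("long-but-clean", (64501, 64502, 64510), latency_ms=45.0, loss_pct=0.0),
]

print("AS-path choice:", pick_by_as_path(routes).name)
print("Latency choice:", pick_by_latency(routes).name)
```

With these made-up numbers, the AS-path rule selects the two-hop route even though the three-hop route is faster and loses no packets.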

TCP, the primary transport protocol of the Internet, starts a connection thinking it's on a private dark fiber wire, which is clearly not the case. Its inbuilt mechanisms do not start from an assumption of packet loss, high latency and jitter. It presumes a perfect network with no packet loss and low latency and works back from there. This is the opposite of what it should do.

As it stands, the Internet by default is not performance optimized yet everything and anything is getting pushed to the Internet. It has become an integral part of our culture and shaped the way we communicate.

High User Expectations

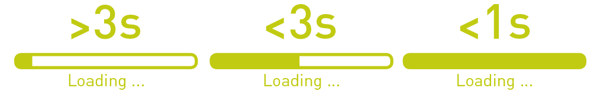

Users want seamless access to content from a variety of device types and locations without any performance degradation. Their patience is decreasing, and if a page takes more than a couple of seconds to load, their attention shifts and they move on to something else. Slow page loads and video buffering are not acceptable, and everyone has high expectations. Optimum application performance is a must for today's user base. There are plenty of challenges to application performance on the Internet -- the long distances and high latency are the key ones.

Latency is Never Zero!

No matter how advanced technology and interconnect models become, there will always be latency. Latency is never zero! Even in a vacuum, light needs roughly 134ms to circle the earth, and in optical fiber it is closer to 200ms. So why do we often experience latency well above what distance alone would explain, even between two points that are not on opposite sides of the world?

Most latency is not a function of the speed of light but of the processing times incurred at the devices between endpoints. The hops add the latency that we see in the paths; each hop adds a little bit to latency, thereby increasing the overall end-to-end latency. Individual device processing causes it, and since everything needs to be processed, many factors contribute to latency.

You can't change the speed of light! As data travels from one point to another, it travels as light in a fiber. However, this is not the only issue causing latency. We have many other challenges such as buffer problems, secured communications, TCP inbuilt congestion control and connection setup.
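As a rough check on the speed-of-light floor discussed above, the following Python sketch computes propagation delay from distance alone. The function name is made up for this example, the 0.67 velocity factor is an assumption (light in glass travels at roughly two thirds of its vacuum speed), and the London to New York distance is an approximate great-circle figure, not a real cable length.

```python
C_VACUUM_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_FACTOR = 0.67          # assumption: about 2/3 of c inside optical fiber


def one_way_delay_ms(distance_km, velocity_factor=FIBER_FACTOR):
    """Propagation delay only: no queuing, processing or serialization."""
    return distance_km / (C_VACUUM_KM_S * velocity_factor) * 1000.0


EARTH_CIRCUMFERENCE_KM = 40_075
LONDON_NEW_YORK_KM = 5_570   # approximate great-circle distance

print("Around the earth, vacuum: %.0f ms"
      % one_way_delay_ms(EARTH_CIRCUMFERENCE_KM, velocity_factor=1.0))
print("Around the earth, fiber:  %.0f ms"
      % one_way_delay_ms(EARTH_CIRCUMFERENCE_KM))
print("London to New York RTT floor in fiber: %.1f ms"
      % (2 * one_way_delay_ms(LONDON_NEW_YORK_KM)))
```

Under these assumptions the transatlantic round trip has a floor of roughly 55ms. Anything you measure above that comes from the detours, hops and processing described in this section.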

Throwing money at the problem by buying more bandwidth won't fix latency. Bandwidth and latency are two different things. Bandwidth refers to how much data you can move in a given period. Latency is a measure of the time it takes to complete a task. Their differences lead to different styles of applications being more suited to high/low bandwidth or high/low latency environments.
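The difference between the two can be shown with a deliberately simplified model: fetch time is a fixed number of round trips plus the time needed to push the bytes onto the wire. The function name, the default of two round trips and the sample numbers are assumptions chosen for illustration, and the model ignores congestion control and packet loss.

```python
def fetch_time_ms(size_bytes, bandwidth_mbps, rtt_ms, round_trips=2):
    """Simplified fetch time: round trips plus serialization of the payload."""
    serialization_ms = size_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return round_trips * rtt_ms + serialization_ms


SIZE = 100_000  # a 100 KB object, hypothetical

baseline = fetch_time_ms(SIZE, bandwidth_mbps=10, rtt_ms=100)
more_bandwidth = fetch_time_ms(SIZE, bandwidth_mbps=100, rtt_ms=100)
less_latency = fetch_time_ms(SIZE, bandwidth_mbps=10, rtt_ms=20)

print("10 Mbps, 100 ms RTT:  %.0f ms" % baseline)
print("100 Mbps, 100 ms RTT: %.0f ms" % more_bandwidth)
print("10 Mbps, 20 ms RTT:   %.0f ms" % less_latency)
```

In this model, ten times the bandwidth takes the fetch from about 280ms to about 208ms, while cutting the RTT from 100ms to 20ms on the original link takes it to about 120ms.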

Latency vs Applications

Applications are categorised by how they react to high and low latency. Applications such as Netflix are more concerned with high bandwidth than low latency. The pre-downloading of videos to the local machine masks a lot of the problems that cause drag and delays in the video display.

Low latency is crucial when the timely delivery of information is more important than the quantity of information. Latency is most notable, for example, on websites where you can't click on an asset as the page is still rendering, for a gamer experiencing lag during an online multiplayer game, or when it takes a few seconds for a news reporter on a satellite phone to respond to questions from the central office. These issues are all due to latency added by different processing points in the network path. And, depending on the application type, it may cause pain points and overall degradation of user experience.

Even if the application can handle high latency, you should always strive for stable low latency communications. Latency will always exist -- you can't get away from it. But you can employ techniques to get it down to a level that the application can support and run efficiently.

TCP & Latency

Latency also affects the TCP conversation setup with its 3-way handshake. TCP works at the transport layer of the OSI Model. It allows endpoints to link together by creating a connection that must be set up before any data can pass, for example before a webpage can be viewed. The process of establishing the bi-directional channel all happens at the transport layer with TCP.

How it Works

There are a couple of steps involved. The first step is usually a DNS lookup, which is typically quick, often under 1ms when the answer is already cached locally. The DNS lookup is rarely a performance problem, except when there's a DNS attack.

● The host initiating the connection sends a SYN request. The SYN request is a signal to talk and is described as a synchronization packet.

The SYN carries a sequence number, the TCP window size (receive buffer size) and the maximum segment size (MSS), which is the maximum number of payload bytes we can put in a packet.

● The remote endpoint receives the SYN and responds with a SYN + ACK (acknowledgement).

● The originator host receives the SYN + ACK and responds with an ACK packet.
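The cost of the handshake above can be observed from user space, because a blocking `connect()` only returns once the SYN + ACK has come back. The sketch below is a minimal illustration using Python's standard `socket` module. The helper names are invented for this example, and it talks to a throwaway listener on the loopback interface so that it is self-contained; against a remote host the measured time would be roughly one RTT.

```python
import socket
import threading
import time


def time_tcp_connect(host, port, timeout=3.0):
    """Return the milliseconds it takes for the TCP handshake to complete."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def start_local_listener():
    """Start a throwaway TCP listener on a free loopback port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(5)

    def accept_loop():
        while True:
            try:
                conn, _ = server.accept()
            except OSError:
                break  # listener was closed
            conn.close()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server


server = start_local_listener()
port = server.getsockname()[1]
print("Handshake on loopback took %.3f ms" % time_tcp_connect("127.0.0.1", port))
server.close()
```

Pointing `time_tcp_connect` at a server on another continent instead of the loopback address should make the distance penalty visible.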

RTT & Congestion Window

The TCP connection setup costs one full round-trip time (RTT) before the client can send any data: the SYN travels to the server and the SYN + ACK comes back. This adds latency before anything useful passes between the two endpoints. There is another RTT for the HTTP request and the HTTP response. In total, that is two RTTs before the first byte of data arrives.

And then the TCP congestion window kicks in. The initial congestion window was traditionally three packets: 3 x 1460 bytes, which is about 4.4k of data (many current TCP stacks start with ten packets instead). As a result, in the first response, the server can only send about 4.4k of data; anything beyond that costs at least another RTT.
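The effect of the initial congestion window described above can be sketched with an idealized slow-start model in Python. The function name is made up, and the model assumes the window doubles every round trip with no packet loss, no receive-window limit and no delayed ACKs, so treat the results as a lower bound rather than a prediction.

```python
def round_trips_to_deliver(response_bytes, mss=1460, initial_cwnd=3):
    """Count the RTTs an idealized slow start needs to send a response."""
    cwnd = initial_cwnd  # congestion window, in segments
    sent = 0
    rtts = 0
    while sent < response_bytes:
        sent += cwnd * mss  # one window's worth of data per round trip
        cwnd *= 2           # slow start: the window doubles each RTT
        rtts += 1
    return rtts


for size in (4_000, 10_000, 100_000):
    print("%7d bytes: %d RTTs with 3 packets, %d RTTs with 10 packets" % (
        size,
        round_trips_to_deliver(size, initial_cwnd=3),
        round_trips_to_deliver(size, initial_cwnd=10),
    ))
```

In this model a 100,000-byte response needs five round trips when starting from three packets, which is why every millisecond of RTT is multiplied several times over for anything but the smallest responses.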

Help is at Hand!

There are many things you can do in the browser, content, network and server layers to aid this. The mechanisms are well-documented at IPSpace.

The main areas of interest are to make fewer HTTP requests, to minimize cookies that are sent with every request, to cache content and to use a CDN, so that static content is closer to the users! Moving static content geographically closer to users is a natural way to reduce latency and improve the user experience.

Physical Proximity

While bandwidths are increasing and getting cheaper by the year, there is nothing we can do about RTT and latency. You can't decrease latency by throwing more bandwidth at the problem, and you can't buy latency with euros! While there are plenty of metrics that affect application performance, aside from packet loss, latency is the worst, and there is not an awful lot we can do about it. There are some purpose-built devices designed to decrease latency but in many cases, they do the opposite and end up increasing it.

Physical proximity is one of the major factors behind high latency and, with it, poor application performance. The further away users are from the requested content, the higher the RTT, and there is nothing you can do about this unless you change the speed of light. There are different response times from various locations, so you should aim to put content close to the user -- right under their noses if possible. A user accessing content over a cross-continental link will surely have better performance if they can access the content locally in their region. Requests that need to cross regions or even continents must cover long distances, resulting in higher latency.
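The idea of serving users from the closest location can be sketched in a few lines of Python. Everything here is a simplification: the location names and coordinates are illustrative, the 200,000 km/s fiber speed is an approximation, and picking by straight-line distance is only a stand-in, since real networks steer requests based on routing and measurements rather than geography alone.

```python
import math

FIBER_KM_PER_S = 200_000  # approximation: about 2/3 of the speed of light

# Hypothetical content locations: name -> (latitude, longitude)
LOCATIONS = {
    "london": (51.5074, -0.1278),
    "new-york": (40.7128, -74.0060),
    "singapore": (1.3521, 103.8198),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on the earth, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def nearest_location(user_lat, user_lon, locations=LOCATIONS):
    """Return (name, distance_km, rtt_floor_ms) for the closest location."""
    name, (lat, lon) = min(
        locations.items(),
        key=lambda item: haversine_km(user_lat, user_lon, *item[1]),
    )
    distance = haversine_km(user_lat, user_lon, lat, lon)
    rtt_floor_ms = 2 * distance / FIBER_KM_PER_S * 1000
    return name, distance, rtt_floor_ms


for city, (lat, lon) in {"paris": (48.8566, 2.3522),
                         "sydney": (-33.8688, 151.2093)}.items():
    name, km, rtt = nearest_location(lat, lon)
    print("%s -> %s: %.0f km, RTT floor %.1f ms" % (city, name, km, rtt))
```

The RTT floor only covers propagation in fiber; real round trips are higher, but the ranking between near and far locations usually holds.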

Addressing all these problems to attain peak application performance isn't an easy task and would involve changing the underlying fabric and protocols of the Internet. This will not happen anytime soon.

CDN to the Rescue

However, we are seeing new ways of doing things at the edges with various optimization techniques and protocol revisions, for example, HTTP/2. All these are a step in the right direction, but ultimately distance and latency will always be the major performance factor.

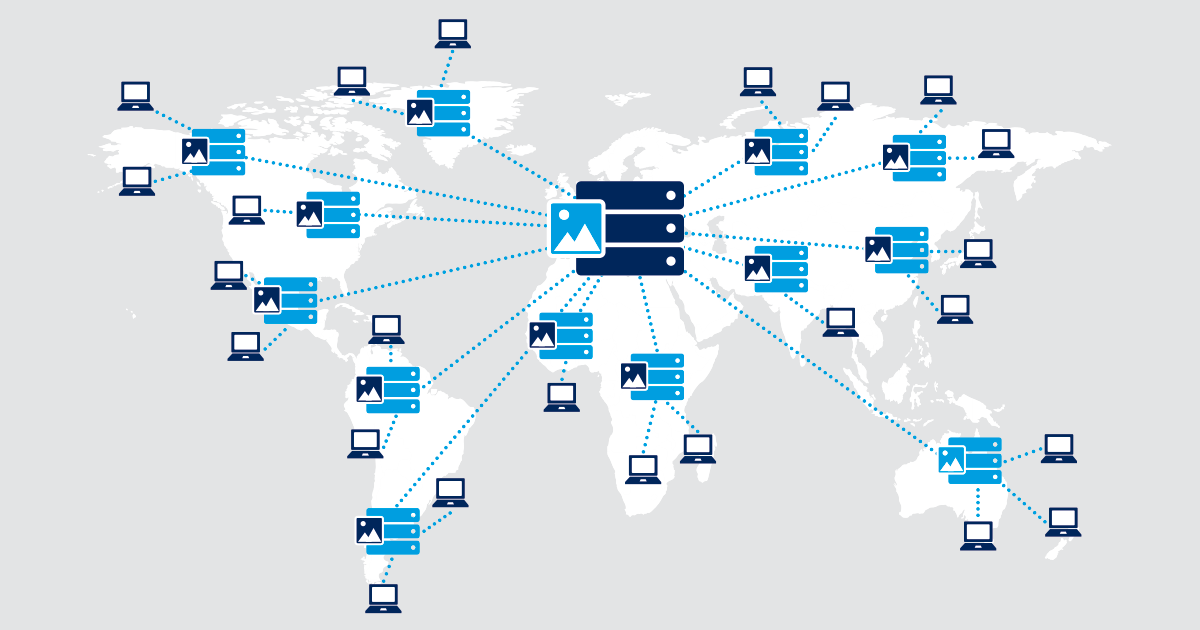

This is where the workings of a CDN and their local PoPs come into play! It is certainly easier to deploy a CDN than to try to reinvent the core of the Internet and all of the underlying protocols. Not only would these protocols first need to be invented, but they would require global interoperability and agreements between all providers!

Stay tuned for more! This article is the first in a series about content delivery networks.

Want to know more?

Here are some additional links you might find interesting:

- Matt Conran's Network Insight Blog

- Ivan Pepelnjak's ipSpace Blog

- Paessler's Cloud Ping and Cloud HTTP Sensors